The post Safety Meets Security: Building Cyber-Resilient Systems for Aerospace and Defense appeared first on RunSafe Security.

In this environment, a software glitch, a failed component, or a cyber intrusion can have the same catastrophic impact: a system that doesn’t behave as intended when lives and missions are on the line.

Patrick Miller, Product Manager at Lynx, has spent his career at the intersection of safety, security, and performance, working across aerospace, defense, enterprise cloud, and embedded systems.

In this Q&A, Patrick shares how architecture, separation, and long-term thinking can help engineers and product teams design resilient weapons systems.

1. You have a unique background that spans aerospace, defense, enterprise cloud, and now safety-critical embedded systems. What lessons from those different domains help you approach safety and security in critical systems today?

Patrick Miller: The biggest lesson is that every domain taught me something different about risk. Security is as much about governance and auditability as it is about technical controls. In defense and aerospace, I learned that resilience isn’t just about preventing failures, it’s about designing systems that degrade gracefully when something does go wrong.

But the connective tissue across all these domains is, particularly in product management, you must know your customer’s actual need, not just the problem you think you’re solving.

Early in my career, I realized that the “why” behind a security requirement often matters more than the requirement itself.

2. Safety and security are often treated as separate priorities. How do you see them intersecting, particularly in aerospace and defense?

Patrick: They are not separate priorities but two expressions of the same goal: keep the system doing what it’s supposed to do, when it’s supposed to do it, and in the face of adversity.

Safety asks, “What happens when things might fail?”

Security asks, “What happens when someone tries to make them fail?”

In aerospace, especially, legacy systems were designed in an era when the threat model was simple: physical tampering or insider threat. Now we have connected avionics and software-defined platforms with attack surfaces we didn’t have to think about ten years ago.

A safety failure and a security failure can look identical from the flight deck, and both result in the aircraft not doing what the crew intended. The intersection is in architecture. If you design a system with strong separation, say at the kernel level, between safety-critical functions and everything else, you’re solving for both.

3. The industry is moving toward software-defined platforms in aircraft and defense systems. What new risks does this introduce—and what opportunities to improve resilience?

Patrick: A key risk is that a vulnerability in one aircraft or subsystem can theoretically affect an entire fleet. However, this shift also creates the opportunity to build security and resilience from day one, rather than bolting it on afterward.

When the industry is trying to add security to 20-year-old real-time operating systems to modernize embedded platforms for customers, it’s like retrofitting a house with a new foundation. Software-defined systems let you architect with separation, modularity, and defense-in-depth from the start. You can implement zero-trust and cyber-resilience principles in real-time environments in ways you couldn’t with monolithic systems.

4. Aerospace and defense systems often have very long lifecycles. What challenges does that create for sustaining cybersecurity, and how can architecture choices mitigate some of the risks?

Patrick: This is one of the most complex problems in the industry. A crewed aircraft certified today will probably still be flying forty years from now, just as there are crewed aircraft certified forty years ago still flying today. By then, the threat landscape will have evolved dramatically. Cryptographic algorithms considered secure today may be obsolete in a post-quantum world.

The mitigation is architectural resilience. First, design with modularity so that security updates can be applied surgically to vulnerable components without recertifying the entire system. Second, implement strong separation so that compromising one module doesn’t cascade through the entire aircraft. Finally, at the program level, it means thinking about your supply chain and third-party dependencies not as static decisions, but as ongoing risk management.

5. UAVs and other unmanned systems are becoming disposable assets in some missions. How does that change the calculus of how much safety vs. security you embed at the system level?

Patrick: The step-change in the evolution of UAVs flips the traditional paradigm on its head. With a crewed aircraft, safety is paramount because at least one, or more likely, many human lives are at stake. In a contested environment, the calculus is different for a single-use tactical UAV. You might accept a higher technical risk if it means fielding new capabilities faster.

Here’s where I push back on the “disposable” framing: even if the platform is disposable, the capability often isn’t. If an adversary captures and reverse-engineers your UAV, they can gain insights into your tactics, sensors, and comm architecture, which requires constant iteration to stay ahead. So even for “disposable” systems, I think about: What’s worth protecting architecturally?

You can design a UAV that’s tactically expendable but still prevents an adversary from extracting intelligence or spoofing commands.

6. How can teams modernize embedded platforms without introducing unacceptable risk or performance tradeoffs?

Patrick: This is where data-driven prioritization really matters. The temptation is always to boil the ocean: add every new security feature, refactor the entire architecture, and implement the latest standards. Instead, measure the opportunity cost of each modernization decision. What are my top three security gaps today? What are my top three performance bottlenecks? Which modernization efforts address both?

Implementing strong separation boundaries improves real-time performance by preventing one task from blocking another, particularly in multi-core processors. At a practical level, invest in your DevSecOps pipeline early and institute automated testing, static analysis, and security scanning to build confidence to modernize faster.

7. Lynx emphasizes separation kernels and mixed-criticality architectures. What does “designing for separation” mean in practice, and how does it help balance safety and cybersecurity in real-time environments?

Patrick: “Designing for separation” means treating compartmentalization as a primary architectural concern, not an afterthought. It’s the difference between saying “we’ll secure the perimeter and hope nobody gets through” and saying “someone eventually gets through, here are the north-south and east-west limits that prevent further intrusion, here is how we know the threat actor entered and how to neutralize them.”

In practice, that means defining your trust boundaries early. What functions are safety-critical? What modules are network-connected? What functions are mission-critical but not safety-critical? A separation kernel enforces those boundaries between partitions at the hypervisor level: one partition can’t access another’s memory; one partition can’t interfere with another’s timing. In real-time systems, timing is paramount, so this isolation protects both safety and security simultaneously.
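The memory-isolation rule described above can be pictured with a small model. This is a minimal sketch, not how any real separation kernel (including Lynx's) is implemented: the `Partition` and `SeparationPolicy` classes, the partition names, and the address ranges are all hypothetical, and timing isolation is not modeled at all.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Partition:
    name: str
    base: int  # first address owned by the partition (hypothetical)
    size: int  # number of bytes owned

    def owns(self, addr: int, length: int = 1) -> bool:
        """True if [addr, addr + length) lies entirely inside this partition."""
        return length > 0 and self.base <= addr and addr + length <= self.base + self.size


class SeparationPolicy:
    """Toy stand-in for the memory-isolation rule a separation kernel enforces."""

    def __init__(self, partitions):
        self.partitions = {p.name: p for p in partitions}

    def check_access(self, partition_name: str, addr: int, length: int = 1) -> bool:
        # A partition may only touch memory inside its own range.
        return self.partitions[partition_name].owns(addr, length)


policy = SeparationPolicy([
    Partition("flight_control", base=0x1000, size=0x1000),  # safety-critical
    Partition("network_stack", base=0x2000, size=0x1000),   # network-connected
])
```

In this model, `policy.check_access("network_stack", 0x1800)` is rejected because the address belongs to the flight-control range, which is the property that keeps a compromised network-facing partition from reaching safety-critical state.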

8. What emerging standards or regulations do you anticipate having the greatest impact on how manufacturers secure and certify safety-critical systems?

Patrick: The Department of Defense’s Software Fast-Track initiative is pushing contractors to adopt modern DevSecOps practices and accelerate secure software delivery timelines. We’re also watching how NIST 800-53 and 800-171 requirements cascade down through the supply chain, forcing even smaller tier-two and tier-three suppliers to implement rigorous security controls.

The Software Bill of Materials (SBOM) mandate is particularly interesting because it’s forcing manufacturers to have real visibility into their software dependencies, which is foundational for long-term supply chain security.

On the civil aviation side, DO-326A and DO-356 are pushing the industry from a compliance-checkbox approach toward continuous monitoring and threat assessment throughout the aircraft lifecycle. Zero-trust mandates across both defense and critical infrastructure are also driving architectural changes at the platform level, which aligns well with what we’re building at Lynx.

9. You’re both a technologist and a pilot. From an operator’s perspective, what would “built-in cyber resilience” mean to you sitting in the cockpit?

Patrick: It means I can trust the displays and controls to follow my inputs and trust the instruments. It means that if there’s a compromise somewhere in the system or sensor, it fails safely, perhaps with a display warning, but not with an unexpected output from the aircraft. A pilot already has enough mental load as it is and does not want to have to think or worry about cybersecurity while flying.

There’s a saying: “aviate, navigate, communicate.” Built-in resilience means the architects and engineers did their job right so that cybersecurity is invisible to me, a redundancy so the aircraft operates reliably.

10. If you could leave engineers and product teams working in critical infrastructure with one key takeaway about building secure and safe systems, what would it be?

Patrick: Know your customer’s actual problem, not just the requirement they gave you. Sometimes the requirement is a proxy for something deeper. Sometimes the customer doesn’t even know how to articulate it yet. For me, that means talking directly with customers, attending industry conferences, asking hard questions, and actively listening.

What’s your threat model today? How are you thinking about long-term sustainability? What architecture decisions are constraining you? Be honest about tradeoffs. You can’t optimize for everything, but if you understand your customer’s actual priorities, you can make product feature choices that strike the right balance.

Designing for Safety, Security, and Longevity

In aerospace and defense systems, safety and security directly overlap. The same design choices that protect flight safety also determine cyber resilience. Architectural separation, modularity, and supply chain transparency are prerequisites for survivability in the digital battlespace.

RunSafe Security and Lynx have partnered to advance this mission through technical collaboration. The integration of LYNX MOSA.ic and RunSafe Protect delivers the industry’s first DAL-A certifiable, memory-safe RTOS platform, uniting safety, security, and operational efficiency in a single solution.

Read our joint white paper for more on this partnership: Integrating RunSafe Protect with the LYNX MOSA.ic RTOS

The post Memory Safety KEVs Are Increasing Across Industries appeared first on RunSafe Security.

In a webinar hosted by Dark Reading, RunSafe Security CTO Shane Fry and VulnCheck Security Researcher Patrick Garrity discussed the rise of memory safety vulnerabilities listed in the Known Exploited Vulnerabilities (KEV) catalog and shared ways organizations can manage the risk.

Memory Safety KEVs on the Rise

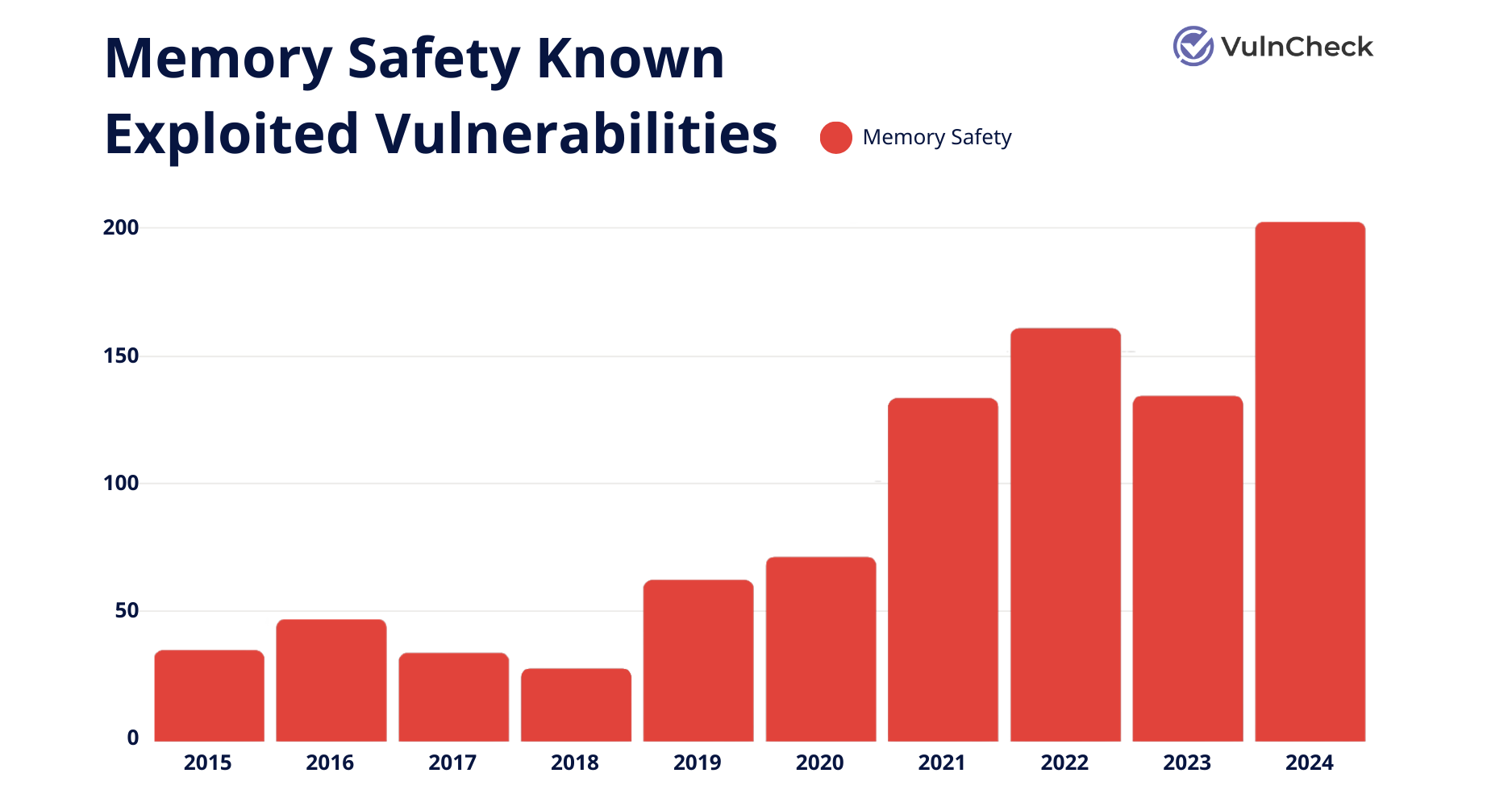

Data from VulnCheck shows a clear increase in memory safety KEVs over the years, reaching a high in 2024 of around 200 total KEVs.

“We’re seeing the number of known exploited vulnerabilities associated with memory safety grow,” Patrick said. “If you look at CISA’s KEV list, the concentration is quite high as far as volume.”

Data from VulnCheck, Memory Safety Known Exploited Vulnerabilities

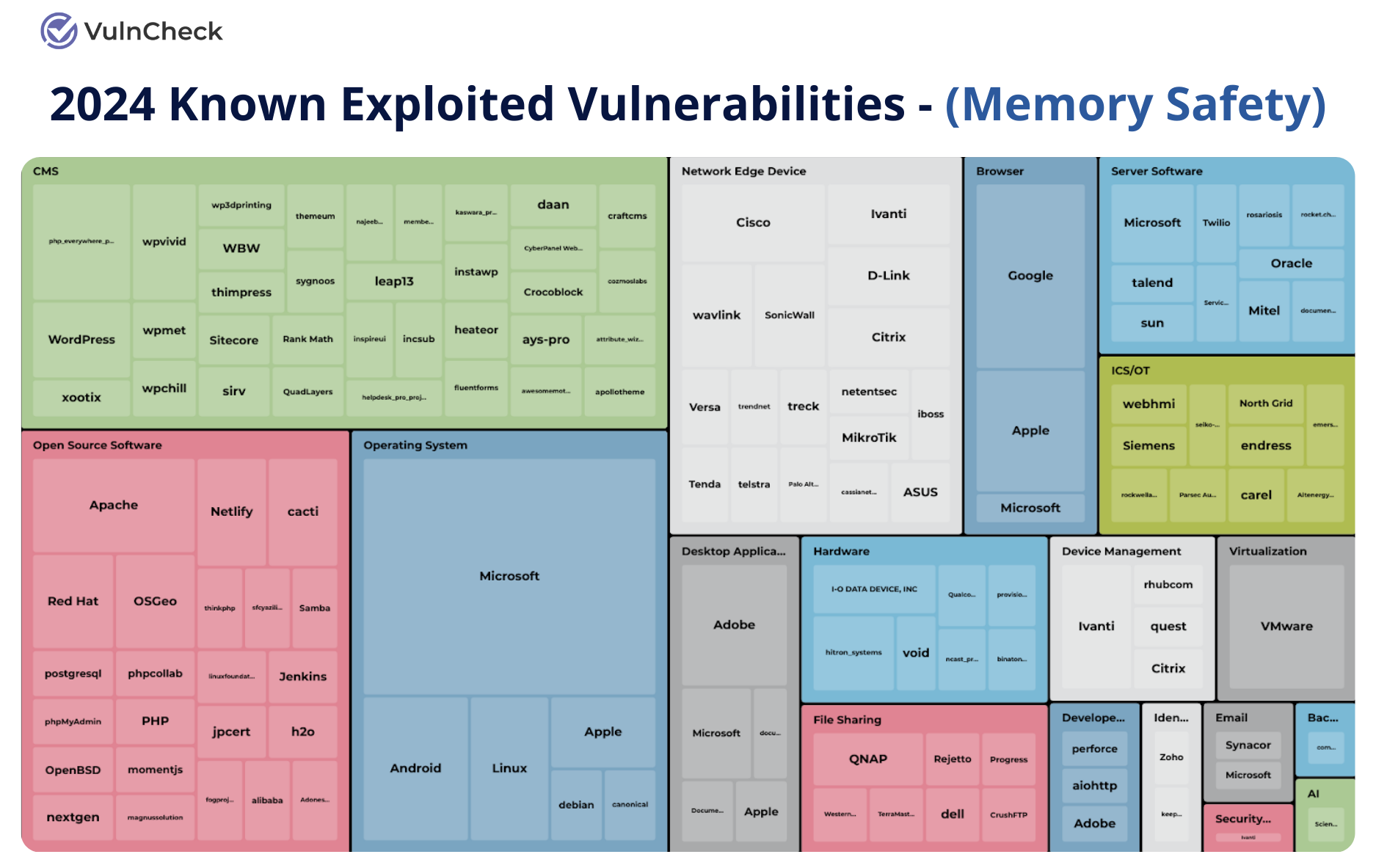

Memory safety KEVs are also found across industries, including network edge devices, hardware and embedded systems, industrial control systems (ICS/OT), device management platforms, operating systems, and open source software.

Data from VulnCheck, Memory Safety Known Exploited Vulnerabilities by Industry

Patrick emphasized the universal nature of the threat: “If you look at this list, there’s manufacturing impacted, medical devices, embedded systems, and critical infrastructure. Across the board from an industry perspective, you’re going to see these vulnerabilities everywhere.”
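A count like the one charted above can be reproduced from any list of exploited-vulnerability records that carry CWE tags. The following is a minimal sketch under stated assumptions: the record shape (`cve`, `year`, `cwes`), the placeholder CVE identifiers, and the subset of CWE IDs treated as memory safety weaknesses are all illustrative, not VulnCheck's or CISA's actual schema or methodology.

```python
from collections import Counter

# Illustrative subset of memory safety weakness IDs (an assumption, not a complete list).
MEMORY_SAFETY_CWES = {"CWE-119", "CWE-122", "CWE-416", "CWE-787", "CWE-190"}


def memory_safety_kevs_by_year(records, cwe_ids=MEMORY_SAFETY_CWES):
    """Count records per year that carry at least one memory safety CWE."""
    counts = Counter()
    for rec in records:
        if cwe_ids.intersection(rec.get("cwes", [])):
            counts[rec["year"]] += 1
    return dict(sorted(counts.items()))


# Hypothetical sample data; the identifiers are placeholders, not real CVEs.
sample_records = [
    {"cve": "CVE-0000-0001", "year": 2023, "cwes": ["CWE-787"]},
    {"cve": "CVE-0000-0002", "year": 2024, "cwes": ["CWE-416"]},
    {"cve": "CVE-0000-0003", "year": 2024, "cwes": ["CWE-79"]},  # not memory safety
    {"cve": "CVE-0000-0004", "year": 2024, "cwes": ["CWE-122", "CWE-787"]},
]

by_year = memory_safety_kevs_by_year(sample_records)
```

A record tagged with several memory safety CWEs is counted once, so the totals reflect vulnerabilities rather than weakness tags.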

There Are a Lot of KEVs, So What?

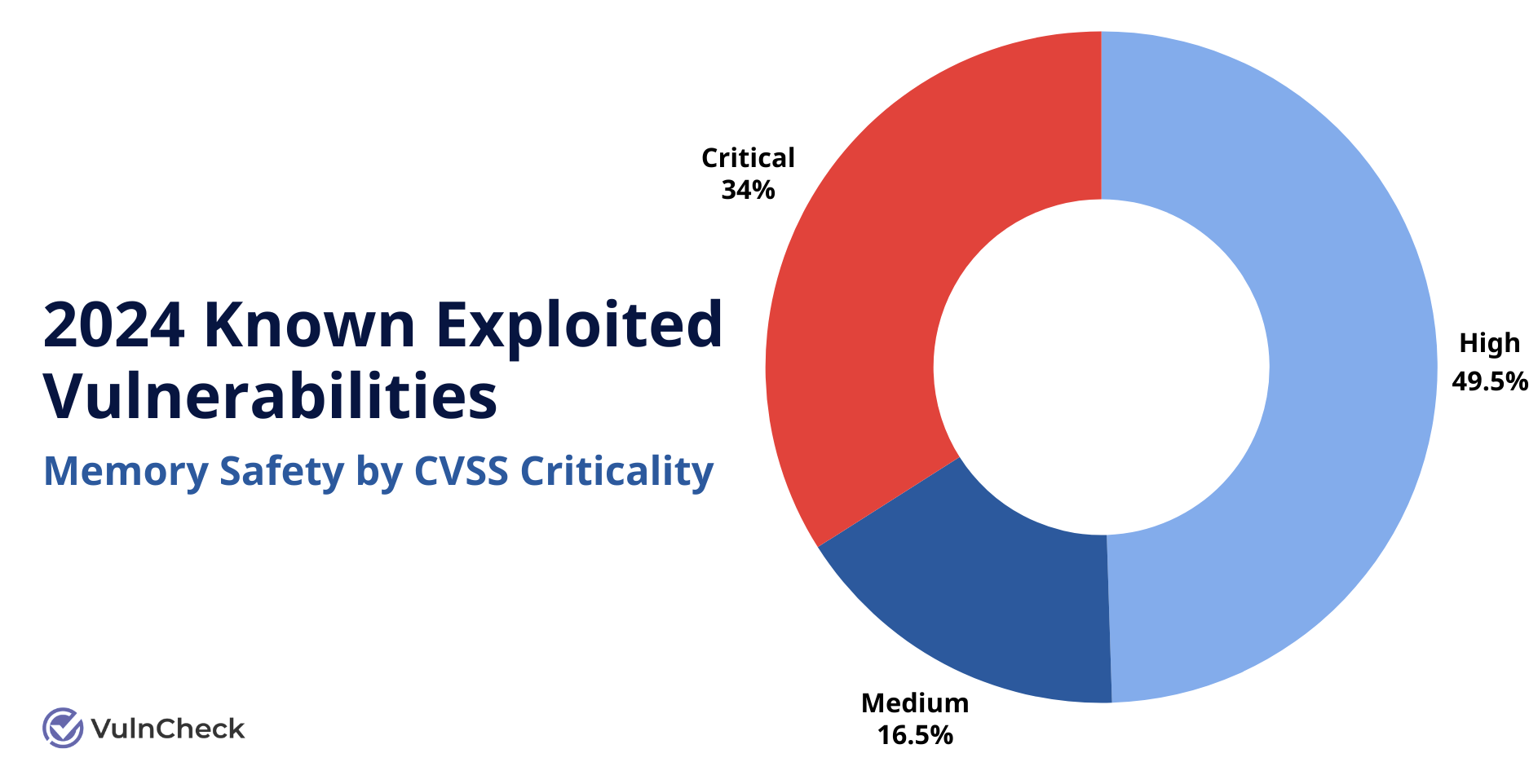

Not only are memory safety KEVs widespread, many are also classified as critical, with high CVSS scores. Six memory safety weakness types are now included in MITRE’s list of the top 25 most dangerous software weaknesses for 2024.

Data from VulnCheck, Memory Safety Known Exploited Vulnerabilities by CVSS Criticality
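For readers less familiar with CVSS, the severity labels follow from the numeric base score. The sketch below maps a CVSS v3.x base score to its qualitative rating using the standard bands (0.0 None, 0.1 to 3.9 Low, 4.0 to 6.9 Medium, 7.0 to 8.9 High, 9.0 to 10.0 Critical); the function name is just an illustrative choice.

```python
def cvss_v3_severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS base scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"
```

Under this scale a score of 7.5 is rated High, while 9.8 is rated Critical.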

Memory safety vulnerabilities—like buffer overflows, use-after-free bugs, and out-of-bounds writes—have long plagued compiled code. “About 70% of the vulnerabilities in compiled code are memory safety related,” explained Shane Fry.

When attackers exploit these bugs, the results can be severe. Organizations may face:

- Arbitrary code execution

- Remote control of devices

- Denial of service

- Privilege escalation

- Data exposure and theft

Real-World Exploits: What the Data Tells Us

Ivanti Connect Secure (CVE-2025-0282)

This KEV, an out-of-bounds write (CWE-787), affected several Ivanti products and was linked to the Hafnium threat actor group. Patrick called out the speed at which this vulnerability moved from discovery to exploitation: “The vendor identifies there’s a vulnerability, there’s exploitation, they disclose the vulnerability, they get it in a CVE, and then CISA adds it—all in the same day.”

Typically, the disclosure process does not flow so quickly, but in this case it was a good thing as the exploit targeted a security product. Shane observed: “One of the very interesting philosophical questions that I think about often in cybersecurity spaces is how impactful a security vulnerability in a security product can be. Most people think that if it’s a security product, it’s secure. And off they go.”

Siemens UMC Vulnerability (CVE-2024-49775)

A heap-based buffer overflow flaw (CVE-2024-49775) in Siemens’ industrial control systems exposed critical infrastructure to risks of arbitrary code execution and disruption. The vulnerability exemplifies the widespread impact memory safety issues can have across product lines when they affect common components.

Global Recognition of the Growing Risk

The accelerating growth of memory safety KEVs has not gone unnoticed by global security organizations. In 2022, the National Security Agency (NSA) issued guidance stating that memory safety vulnerabilities are “the most readily exploitable category of software flaws.”

Their guidance recommended two approaches:

- Rewriting code in memory-safe languages like Rust or Golang

- Deploying compiler hardening options and runtime protections

Similarly, CISA has emphasized memory safety in its Secure by Design best practices, advocating for organizations to develop memory safety roadmaps.

The European Union’s Cyber Resilience Act (CRA) takes a broader approach, emphasizing Software Bill of Materials (SBOM) to help organizations understand vulnerabilities in their supply chain. As Shane noted, “We saw a shift in industry when the CRA became law that, hey, now we have to actually do this. We can’t just talk about it.”

Practical Steps: What Organizations Can Do Now

Given the growing threat landscape, organizations need practical approaches to address memory safety vulnerabilities.

1. Prioritize Critical Code Rewrites

For most companies, a full rewrite in Rust or another memory-safe language isn’t realistic. Instead, start by identifying high-risk, externally facing components and consider targeted rewrites. Shane suggested starting with software or devices that most often interact with untrusted data.

2. Adopt Secure by Design Practices

Implementing secure development practices can help prevent introducing new vulnerabilities.

“There’s a lot of aspects of Secure by Design, like code scanning and secure software development life cycles and Software Bill of Materials, that can help you understand what you’re shipping in your supply chain,” Shane said.

3. Use Runtime Protections

Runtime hardening is an effective defense for legacy or third-party code that can’t be rewritten. Runtime protections prevent the exploitation of memory safety vulnerabilities by randomizing the code layout so that attackers cannot reliably target them.

RunSafe accomplishes this with our Protect solution. “Every time the device boots or every time your process is launched, we reorder all the code around in memory,” Shane said.

Runtime protection also buys time: organizations can avoid shipping emergency patches overnight because their software is already protected.
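The reordering Shane describes can be pictured with a toy simulation. This is only a sketch of the general idea of shuffling function placement at load time, not RunSafe Protect's implementation: the function names, sizes, and base address are hypothetical.

```python
import random

# Hypothetical functions and their sizes in bytes.
FUNCTIONS = {"parse_packet": 0x40, "check_auth": 0x20, "log_event": 0x30, "send_reply": 0x50}


def static_layout(functions, base=0x400000):
    """Every launch places functions in the same order, so addresses never change."""
    layout, addr = {}, base
    for name, size in functions.items():
        layout[name] = addr
        addr += size
    return layout


def load_layout(functions, base=0x400000, rng=None):
    """Place functions in a freshly shuffled order, as a load-time randomizer might."""
    if rng is None:
        rng = random.Random()
    names = list(functions)
    rng.shuffle(names)
    layout, addr = {}, base
    for name in names:
        layout[name] = addr
        addr += functions[name]
    return layout
```

With the static layout, an exploit that hard-codes the address of `check_auth` works on every device. With a shuffled layout, the same hard-coded address usually points somewhere else on the next launch, which is why an attacker can no longer rely on it.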

Memory Safety Is Everyone’s Problem

Memory safety vulnerabilities are becoming more common across industries. The risks are serious, especially when attackers can use these flaws to take control of systems or steal data.

Organizations need to take action now. By rewriting the highest-risk code, following secure development practices, and using runtime protections where needed, companies can reduce their exposure to memory safety threats.

Memory safety problems are widespread, but they can be managed. Secure by Design practices and runtime protections offer a path forward for more secure software and greater resilience.

The post Understanding Memory Safety Vulnerabilities: Top Memory Bugs and How to Address Them appeared first on RunSafe Security.

Memory safety vulnerabilities remain one of the most persistent and exploitable weaknesses across software. From enabling devastating cyberattacks to compromising critical systems, these vulnerabilities present a constant challenge for developers and security professionals alike.

Both the National Security Agency (NSA) and the Cybersecurity and Infrastructure Security Agency (CISA) have emphasized the importance of addressing memory safety issues to defend critical infrastructure and stop malicious actors. Their guidance highlights the risks associated with traditional memory-unsafe languages, such as C and C++, which are prone to issues like buffer overflows and use-after-free errors.

In February 2025, CISA drilled down even deeper with their guidance, issuing an alert on “Eliminating Buffer Overflow Vulnerabilities.”

Why do memory corruption vulnerabilities still exist, how do they manifest in practice, and what strategies can organizations implement to mitigate their risks effectively? Let’s take a look.

What Are Memory Safety Vulnerabilities?

Memory safety vulnerabilities occur when a program performs unintended or erroneous operations in memory. These issues can lead to dangerous consequences like data corruption, unexpected application behavior, or even full system compromise. The Common Weakness Enumeration (CWE), a community-developed catalog of software and hardware weakness types, highlights these as some of the most severe weaknesses in software today.

Memory safety issues are inherently tied to programming languages and runtime environments. Languages like C and C++ offer control and performance but lack built-in memory safety mechanisms, making them more prone to such vulnerabilities.

Attackers leverage memory corruption vulnerabilities as access points to infiltrate systems, exploit weaknesses, and execute malicious actions. Addressing memory vulnerabilities is essential for safety and security, especially for industries like critical infrastructure, medical devices, aviation, and defense.

Types of Memory Safety Vulnerabilities

There are many types of memory safety vulnerabilities, but a handful are especially important for developers and security professionals to understand. The six explained below appear on the 2024 Common Weakness Enumeration (CWE™) Top 25 Most Dangerous Software Weaknesses list (CWE™ Top 25) and are familiar faces from previous years. The CWE Top 25 ranks weaknesses that are easy to exploit and that have significant consequences.

1. Buffer Overflows (CWE-119)

A buffer overflow occurs when a program writes more data to a buffer than it can safely hold. This overflow can corrupt adjacent memory, potentially leading to crashes, data corruption, or even allowing attackers to execute arbitrary code.
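To make the mechanism concrete, here is a minimal simulation. Python itself is memory safe, so the sketch models raw memory as a `bytearray` in which an 8-byte buffer sits directly before a 4-byte flag field; the layout, names, and flag are hypothetical and chosen only to show how an unchecked copy spills into neighboring data.

```python
BUF_START, BUF_SIZE = 0, 8   # 8-byte input buffer
FLAG_START = 8               # 4-byte "is_admin" flag stored right after it


def make_memory() -> bytearray:
    """Simulated memory region: buffer followed immediately by the flag."""
    return bytearray(BUF_SIZE + 4)


def unchecked_copy(memory: bytearray, data: bytes) -> None:
    """Mimics a C-style copy that trusts the caller and never checks the length."""
    for i, byte in enumerate(data):
        memory[BUF_START + i] = byte  # runs past BUF_SIZE into the flag


def checked_copy(memory: bytearray, data: bytes) -> None:
    """Rejects input that does not fit in the destination buffer."""
    if len(data) > BUF_SIZE:
        raise ValueError("input longer than destination buffer")
    memory[BUF_START:BUF_START + len(data)] = data
```

Feeding `unchecked_copy` twelve bytes overwrites the flag with attacker-chosen values, while `checked_copy` refuses the same input and leaves the adjacent memory untouched.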

Example of a Buffer Overflow

A notable example of a buffer overflow vulnerability is CVE-2023-4966, also known as “CitrixBleed,” which affected Citrix NetScaler ADC and Gateway products in 2023. This critical flaw allowed attackers to bypass authentication, including multi-factor authentication, by exploiting a buffer overflow in the OpenID Connect Discovery endpoint.

The vulnerability enabled unauthorized access to sensitive information, including session tokens, which could be used to hijack authenticated user sessions. Discovered in August 2023, CitrixBleed was actively exploited by various threat actors, including ransomware groups like LockBit, leading to high-profile attacks such as the Boeing ransomware incident.

This vulnerability highlights the ongoing significance of buffer overflow vulnerabilities in critical infrastructure and the importance of prompt patching and session invalidation to mitigate potential compromises.

2. Heap-Based Buffer Overflow (CWE-122)

A heap-based buffer overflow occurs when a program writes more data to a buffer located in the heap memory than it can safely hold. This can lead to memory corruption, crashes, privilege escalation, and even arbitrary code execution by attackers manipulating the heap memory structure.

Example of a Heap-Based Buffer Overflow

An example of a recent critical heap-based buffer overflow is CVE-2024-38812, a vulnerability in VMware vCenter Server, discovered during the 2024 Matrix Cup hacking competition in China. With a CVSS score of 9.8, this flaw allows attackers with network access to craft malicious packets exploiting the DCERPC protocol implementation, potentially leading to remote code execution. This heap overflow vulnerability was initially patched but required a subsequent update to fully address the issue.

3. Use-After-Free Errors (CWE-416)

Use-after-free errors arise when a program continues to use a memory pointer after the memory it points to has been deallocated. This can lead to system crashes, data corruption, or exploitation through arbitrary code execution.
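The pattern can be sketched with a toy allocator. This is a simplified model rather than how any real heap works: `ToyHeap`, its slot-reuse behavior, and the generation-counter check are illustrative assumptions, used here to show why a stale pointer is dangerous and how a liveness check catches it.

```python
class ToyHeap:
    """Toy allocator that reuses freed slots, like many real allocators do."""

    def __init__(self):
        self._slots = []        # backing storage
        self._generation = []   # bumped each time a slot is freed
        self._free = []         # indices available for reuse

    def alloc(self, value):
        if self._free:
            idx = self._free.pop()
            self._slots[idx] = value
        else:
            idx = len(self._slots)
            self._slots.append(value)
            self._generation.append(0)
        return (idx, self._generation[idx])  # handle = slot index + generation

    def free(self, handle):
        self._check(handle)
        idx, _ = handle
        self._generation[idx] += 1
        self._slots[idx] = None
        self._free.append(idx)

    def read_unchecked(self, handle):
        """The bug: dereferences the slot without checking that the handle is live."""
        return self._slots[handle[0]]

    def read_checked(self, handle):
        self._check(handle)
        return self._slots[handle[0]]

    def _check(self, handle):
        idx, gen = handle
        if self._generation[idx] != gen:
            raise RuntimeError("stale handle: memory was freed")
```

If a session object is freed and the slot is then reallocated for attacker-controlled data, `read_unchecked` on the old handle returns the attacker's data, whereas `read_checked` raises an error.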

Example of a Use-After-Free Error

CVE-2021-44710 is a use-after-free (UAF) vulnerability discovered in Adobe Acrobat Reader DC, affecting multiple versions. The vulnerability has a CVSS base score of 7.8, indicating high severity. If successfully exploited, an attacker could potentially execute arbitrary code on the target system, leading to various severe consequences including application denial-of-service, security feature bypass, and privilege escalation.

4. Out-of-Bounds Write (CWE-787)

An out-of-bounds write occurs when a program writes data outside the allocated memory buffer. This can corrupt data, cause crashes, or create vulnerabilities that attackers can exploit.

Example of an Out-of-Bounds Write

CVE-2024-7695 is a high-severity out-of-bounds write vulnerability affecting multiple Moxa PT switch series. The flaw stems from insufficient input validation in the Moxa Service and Moxa Service (Encrypted) components, allowing attackers to write data beyond the intended memory buffer bounds.

With a CVSS 3.1 score of 7.5 (High), this vulnerability can be exploited remotely without authentication. Successful exploitation could lead to a denial-of-service condition, potentially causing significant downtime for critical systems by crashing or rendering the switch unresponsive.

5. Improper Input Validation (CWE-20)

Improper input validation occurs when a system fails to adequately verify or sanitize inputs before they are processed. This flaw can lead to unintended behaviors, including command injection, buffer overflows, or unauthorized access. Attackers exploit this weakness by crafting malicious inputs, often bypassing security controls or causing system failures. Input validation issues are particularly common in web applications and embedded systems where external data is heavily relied upon.
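As an illustration of what adequate validation looks like, the sketch below parses a length-prefixed message and checks every field before using it. The wire format (a 2-byte big-endian length followed by the payload) and the `MAX_PAYLOAD` limit are hypothetical, chosen only to show the checks.

```python
import struct

MAX_PAYLOAD = 256  # hypothetical protocol limit


def parse_message(packet: bytes) -> bytes:
    """Parse a [2-byte big-endian length][payload] packet, validating each field."""
    if len(packet) < 2:
        raise ValueError("packet too short for length header")
    (declared,) = struct.unpack(">H", packet[:2])
    payload = packet[2:]
    if declared > MAX_PAYLOAD:
        raise ValueError("declared length exceeds maximum")
    if declared != len(payload):
        # Never trust a sender-supplied length without comparing it to reality.
        raise ValueError("declared length does not match payload size")
    return payload
```

The key design choice is that the sender-supplied length is treated as a claim to verify, not a fact to act on. Code that allocates or copies based on an unverified length field is a common route from improper input validation to a buffer overflow.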

Example of Improper Input Validation

CVE-2024-5913 is a medium-severity vulnerability affecting multiple versions of Palo Alto Networks PAN-OS software. This improper input validation flaw allows an attacker with physical access to the device’s file system to elevate privileges.

6. Integer Overflow or Wraparound (CWE-190)

Integer overflow or wraparound occurs when an arithmetic operation results in a value that exceeds the maximum (or minimum) limit of the data type, causing the value to “wrap around.” This vulnerability can lead to unpredictable behaviors, such as buffer overflows, memory corruption, or security bypasses. Attackers exploit this weakness by manipulating inputs to trigger overflows, often resulting in system crashes or unauthorized actions. This issue is common in low-level programming languages like C and C++, where integer operations are not inherently checked.
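A short worked example shows how wraparound turns into a memory bug. Python integers do not overflow, so this sketch emulates 32-bit unsigned arithmetic with a mask; the function names and the allocation scenario are illustrative assumptions.

```python
UINT32_MAX = 0xFFFFFFFF


def alloc_size_wrapping(count: int, item_size: int) -> int:
    """What a 32-bit unsigned multiply produces: the true product modulo 2**32."""
    return (count * item_size) & UINT32_MAX


def alloc_size_checked(count: int, item_size: int) -> int:
    """Compute the allocation size, refusing values that would not fit in 32 bits."""
    if count < 0 or item_size < 0:
        raise OverflowError("negative size")
    total = count * item_size
    if total > UINT32_MAX:
        raise OverflowError("allocation size exceeds 32-bit range")
    return total


# 0x40000001 items of 4 bytes each: the true size is 0x100000004 bytes,
# but the 32-bit result wraps around to just 4 bytes.
wrapped = alloc_size_wrapping(0x40000001, 4)
```

A program that allocates `wrapped` bytes and then writes all 0x40000001 items has a 4-byte buffer receiving gigabytes of data, which is how an integer overflow leads to a heap-based buffer overflow.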

Example of an Integer Overflow

CVE-2022-2329 is a critical vulnerability (CVSS 3.1 Base Score: 9.8) affecting Schneider Electric’s Interactive Graphical SCADA System (IGSS) Data Server versions prior to 15.0.0.22074. This Integer Overflow or Wraparound vulnerability can cause a heap-based buffer overflow, potentially leading to denial of service and remote code execution when an attacker sends multiple specially crafted messages. Schneider Electric released a patch to address this vulnerability.

Memory CVEs Affecting Critical Infrastructure

Recently, nation-state campaigns such as Volt Typhoon have demonstrated the potential real-world impact of memory safety vulnerabilities in the software used to run critical infrastructure.

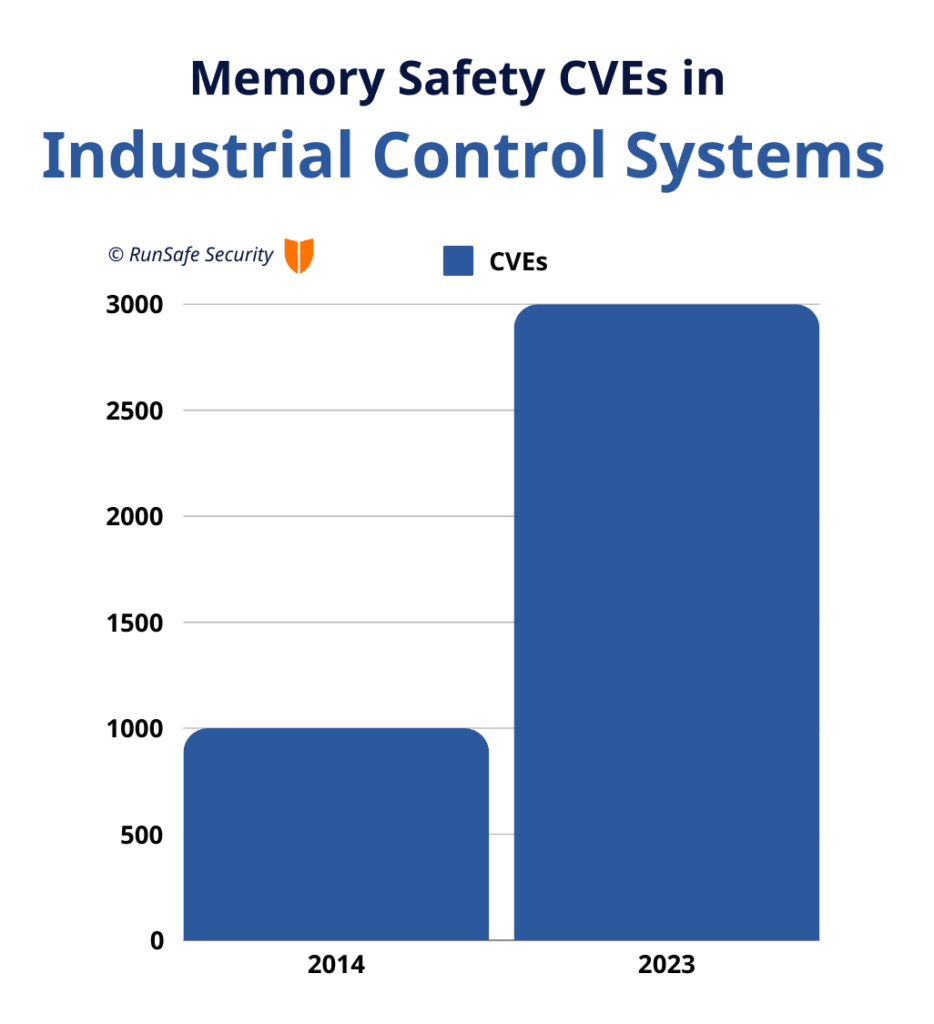

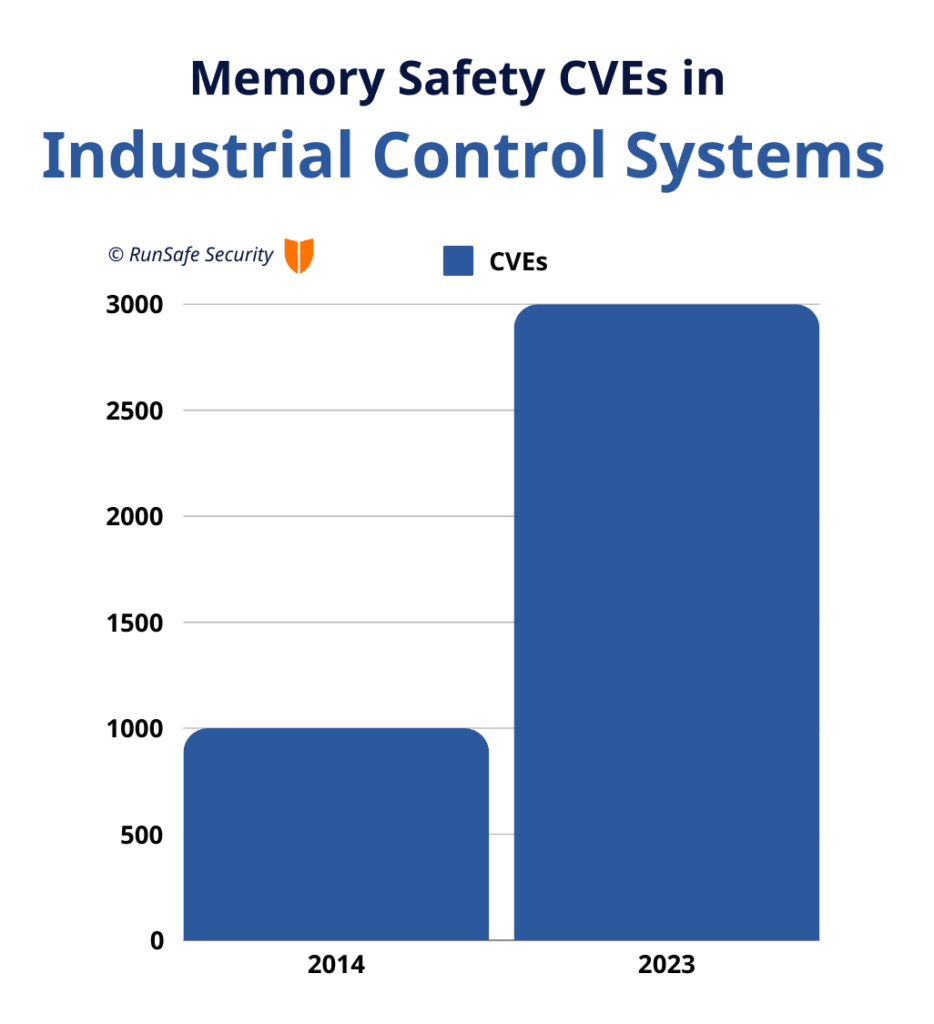

Additionally, in the last few years, memory safety vulnerabilities within ICS have seen a steady upward trend. There were fewer than 1,000 CVEs in 2014 but nearly 3,000 in 2023 alone.

Here are a few examples of memory safety vulnerabilities directly impacting critical infrastructure.

Ivanti Connect Secure Flaw

A zero-day vulnerability (CVE-2025-0282) in Ivanti’s Connect Secure appliances allowed remote code execution, enabling malware deployment on affected devices.

Siemens UMC Vulnerability

A heap-buffer overflow flaw (CVE-2024-49775) in Siemens’ industrial control systems exposed critical infrastructure to risks of arbitrary code execution and disruption.

Mercedes-Benz Infotainment System

Over a dozen vulnerabilities in the Mercedes-Benz MBUX system could allow attackers with physical access to disable anti-theft measures, escalate privileges, or compromise data.

Rockwell Automation Vulnerability

A heap-based buffer overflow (CVE-2024-12372) in Rockwell Automation’s PowerMonitor 1000 Remote product could allow denial of service and possibly remote code execution, compromising system integrity.

Why Addressing Memory Vulnerabilities Is Critical

Memory vulnerabilities represent a significant share of software-based attacks. According to a study by CISA, two-thirds of vulnerabilities in compiled code stem from memory safety issues. These vulnerabilities can impact industries that depend heavily on legacy systems written in C and C++—industries like aerospace, manufacturing, and energy infrastructure.

Key Risks Posed by Memory Vulnerabilities:

- Remote Control Exploits: Attackers can hijack systems, gaining full control over operations.

- Data Breaches: Sensitive information can be corrupted, stolen, or erased.

- Operational Downtime: System instability leads to interruptions in critical services.

- Compliance Failures: Organizations risk fines for failing to meet cybersecurity regulations.

Best Practices for Mitigating Memory Vulnerabilities

Organizations can address memory safety vulnerabilities by taking proactive measures like:

- Adopting secure coding practices, implementing protections such as bounds-checking, avoiding unsafe functions, and rigorously managing dynamic memory usage.

- Using static and dynamic analysis tools and fuzz testing to detect issues during development.

- Using build-time Software Bill of Materials (SBOMs) to identify vulnerabilities and get transparency into memory safety issues in software.

- Transitioning select components to memory-safe languages using incremental migration plans where feasible.

- Employing runtime protections, such as runtime application self-protection, to prevent memory exploitation at runtime.

- Consistently applying security patches for all software and firmware components to address known CVEs quickly.

Bringing About a Memory Safe Future

RunSafe Security is committed to protecting critical infrastructure, and a major key to doing so is eliminating memory-based vulnerabilities in software. Following CISA’s guidance and Secure by Design is an important first step. However, CISA’s guidance to rewrite code in memory-safe languages is impractical for companies that produce dozens, hundreds, or thousands of embedded software devices, often with 10-30 year lifespans.

This is where RunSafe steps in, offering a far more cost-effective and immediate way to prevent the exploitation of memory-based vulnerabilities. RunSafe Protect mitigates cyber exploits through Load-time Function Randomization (LFR), relocating software functions in memory every time the software is run for a unique memory layout that prevents attackers from exploiting memory-based vulnerabilities. With LFR, RunSafe prevents the exploitation of 86 memory safety CWEs.

Rather than waiting years to rewrite code, RunSafe protects embedded systems today, allowing software to defend itself against both known and unknown vulnerabilities.

Interested in understanding your exposure to memory-based CVEs and zero days? You can request a free RunSafe Risk Reduction Analysis.

The post CISA’s 2026 Memory Safety Deadline: What OT Leaders Need to Know Now appeared first on RunSafe Security.

It’s for this reason, among other national security, economic, and public health concerns, that the Cybersecurity and Infrastructure Security Agency (CISA) has made memory safety a key focus of its Secure by Design initiatives.

Now, CISA is urging software manufacturers to publish a memory safety roadmap by January 1, 2026, outlining how they will eliminate memory safety vulnerabilities in code, either by using memory safe languages or implementing hardware capabilities that prevent memory safety vulnerabilities.

Though manufacturers are on the hook for the security of their products, the responsibility doesn’t fall solely on the shoulders of the manufacturers. Buyers of software in the OT sector also have an equally important role to play in addressing memory safety to build the resilience of their mission-critical OT systems against attack.

“The roadmap to memory safety is a great starting point for asset owners to talk to their suppliers, saying this is a big concern of mine, especially for my OT software,” said Joseph M. Saunders, Founder and CEO of RunSafe Security. “Then, what we’re looking for from product manufacturers is that they have a mature process to assess how to achieve memory safety.”

Why Memory Safety Should Be on Your Radar

Why all the fuss about memory safety, and why now? Memory safety vulnerabilities consistently rank among the most dangerous software weaknesses, and they are alarmingly common. Within industrial control systems, memory safety vulnerabilities have been steadily rising, growing from fewer than 1,000 CVEs in 2014 to nearly 3,000 in 2023 alone.

In one example, programmable logic controllers were found vulnerable to memory corruption flaws that could enable remote code execution. In the OT world, where systems control critical industrial processes, such vulnerabilities aren’t just security risks — they’re potential catastrophes waiting to happen.

Building a Memory Safety Strategy: Collaboration Between OT Software Manufacturers and Buyers Is Needed

CISA has set a clear deadline: January 1, 2026. With this date in mind, OT software manufacturers and buyers can begin to have important conversations about addressing memory safety, both for existing products written in memory-unsafe languages and for new products to be released down the line.

What should be on the agenda for discussion when building and evaluating a memory safety roadmap? Here are four key areas to look at.

1. Vulnerability Assessments

Start with a comprehensive Software Bill of Materials (SBOM) to identify and prioritize memory-based vulnerabilities in OT software. Think of it as a detailed inventory that helps you:

- Identify existing vulnerabilities

- Map your software supply chain

- Pinpoint products most at risk from memory-based vulnerabilities
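The inventory idea above can be sketched in a few lines. This is a minimal illustration under stated assumptions: real SBOMs use formats such as SPDX or CycloneDX, whereas the flat dictionaries, product names, component names, and advisory IDs below are all hypothetical placeholders.

```python
def parse_version(version: str) -> tuple:
    """Turn '1.4.2' into (1, 4, 2) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def at_risk_components(sbom, advisories):
    """List (product, component, advisory id, CWE) for components older than the fix."""
    findings = []
    for comp in sbom:
        for adv in advisories:
            same_component = comp["name"] == adv["component"]
            if same_component and parse_version(comp["version"]) < parse_version(adv["fixed_in"]):
                findings.append((comp["product"], comp["name"], adv["id"], adv["cwe"]))
    return findings


# Hypothetical SBOM entries and advisory data, for illustration only.
sbom = [
    {"product": "hmi-panel", "name": "libexample-net", "version": "1.4.2"},
    {"product": "plc-gateway", "name": "libexample-net", "version": "2.0.0"},
    {"product": "plc-gateway", "name": "libexample-json", "version": "0.9.1"},
]
advisories = [
    {"id": "EXAMPLE-0001", "component": "libexample-net", "fixed_in": "1.5.0", "cwe": "CWE-787"},
]

findings = at_risk_components(sbom, advisories)
```

Here only the `hmi-panel` product is flagged, because it ships a version of the affected component older than the fixed release. Grouping findings by product is one simple way to see which products carry the most memory safety exposure.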

2. Smart Remediation Planning

Once vulnerabilities are identified, manufacturers should take the next steps to eliminate them. OT software buyers can discuss remediation options with manufacturers, such as:

- Prioritizing systems with high exposure and potential impact

- Evaluating options for rewriting legacy code in memory-safe languages, like Rust

- Considering proactive solutions such as Load-time Function Randomization (LFR) for existing systems when code rewrites are not practical

3. Future-Proofing Your Products

Software buyers should discuss with their suppliers how they are incorporating memory safety into their product lifecycle planning.

Look ahead by:

- Integrating memory safety into your product roadmap

- Taking advantage of major architectural changes to implement memory-safe languages

- Deploying software memory protection for existing code

4. Building Strong Partnerships

A memory safety roadmap is a great opportunity for software manufacturers and buyers to open up conversations about memory safety and collaborate to find a path forward. When considering working with a supplier, evaluate their willingness to:

- Establish regular communication channels

- Transparently track progress

- Demonstrate a shared commitment to security goals

Moving Forward with CISA’s Memory Safety Guidance

By working together, software buyers and manufacturers can not only act on CISA’s memory safety guidance but also build more resilient OT systems.

“All asset owners should do a study with their suppliers to understand the extent to which they are exposed to memory safety vulnerabilities,” Saunders said.

From there, software manufacturers can build a roadmap to tackle the memory safety challenge once and for all.

Learn more about how RunSafe Security protects critical infrastructure and OT systems from memory-based vulnerabilities.
